Your codebase’s full structure, dependencies, configurations, and conventions become resolved context, so agents reason from facts, not inferences.

Agents stop reconstructing the repository on every query and instead work faster, with fewer tokens, from an authoritative understanding of the code.

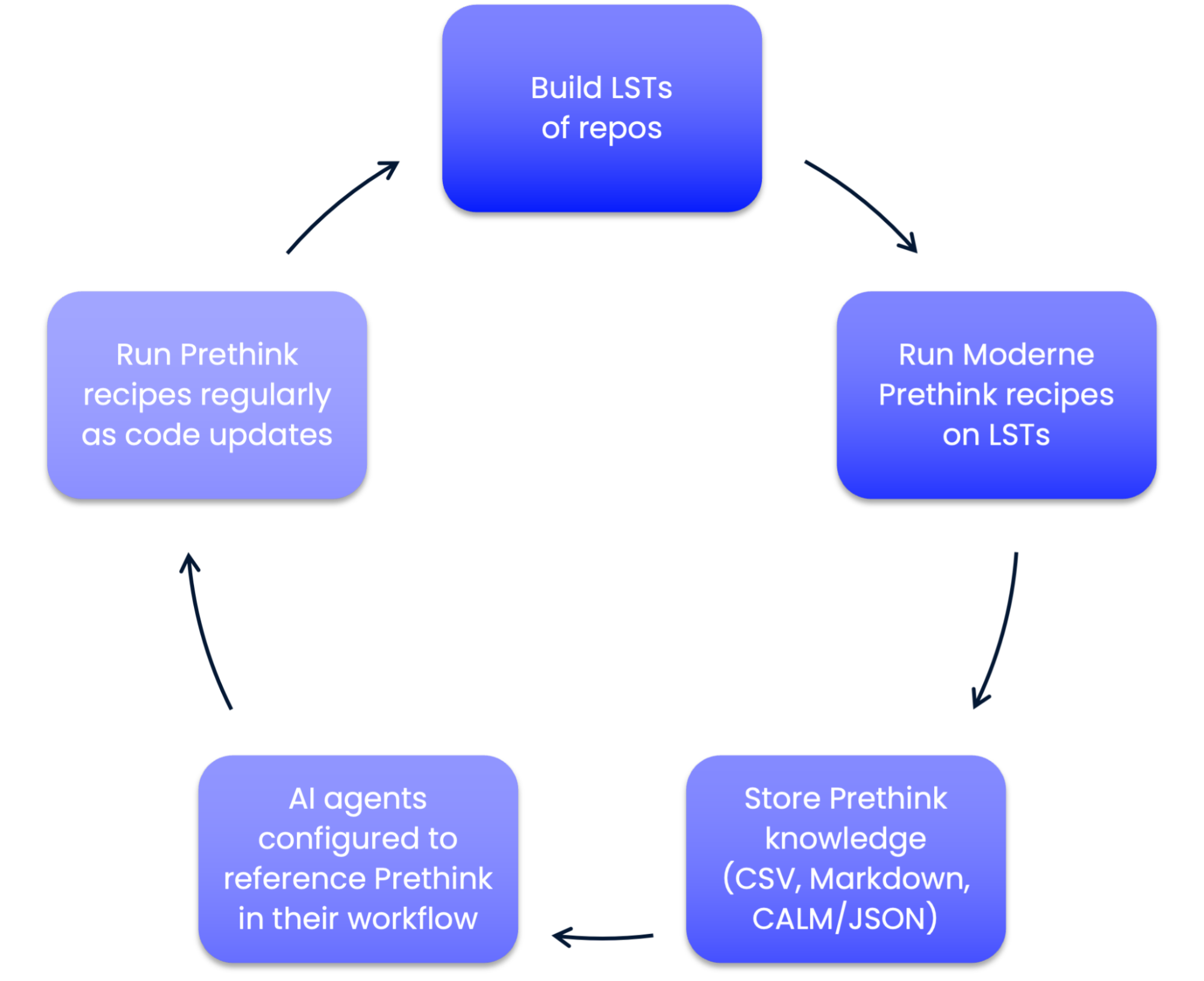

Knowledge is generated programmatically with Moderne recipes, so teams can customize Prethink output.

Prethink is a structured, machine-readable representation of how your codebase actually works, built directly from deep analysis of your code. It captures relationships, dependencies, conventions, and architecture so agents can reason from resolved context instead of inferring from raw files and prompts.

Prethink builds a shared, system-level understanding of your codebase using deterministic analysis and customizable recipes, then configures your agents to reference that context directly.

Prethink models your codebase using Lossless Semantic Trees (LSTs), which capture its full semantic detail and create a resolved view of how your codebase actually works.

Customizable Moderne recipes extract high-signal knowledge from LSTs and materialize it as durable, machine-readable context agents can rely on.

Prethink updates agent configuration so tools consult structured context first, giving agents a shared, up-to-date understanding without reconstructing context on every task.

Agents learn how code is meant to be written and organized in your environment, reducing rework caused by violating conventions or patterns.

Agents see resolved service endpoints, external calls, and integration points, making it easier to reason about the impact and downstream effects of changes.

Agents understand compatibility, risk, and upgrade impact across direct and transitive dependencies without relying on incomplete scans or guesswork.

Agents can reason about runtime behavior and security posture based on real configuration, not assumptions.

CALM-formatted architecture gives agents an explicit model of system structure, boundaries, and relationships.

Agents can see how code is validated and what tests cover, making it easier to propose safe changes and identify gaps with confidence.

When agents can’t see the whole story, they burn time and tokens piecing together context. Moderne Prethink gives them a shared understanding from the start.

Prethink eliminates repeated context reconstruction, cutting token usage and time spent across sessions and workflows.

With real structure and relationships in context, agents hallucinate less and produce more reliable output.

Teams get predictable results because agents work from the same trusted context every time.

AI tools promise speed, but context is the bottleneck. Moderne Prethink changes how coding agents understand real-world codebases.

See how Prethink works under the hood and how to tailor context for your repositories and agent workflows.

The Lossless Semantic Tree (LST) is the compiler-accurate, format-preserving code model that makes safe, automated modernization possible across thousands of repositories.

LLMs need more than raw source files to understand large codebases. Accurate context comes from building a resolved, system-level understanding of the code that captures structure, dependencies, and relationships across repositories. Prethink deterministically derives this context directly from the codebase and keeps it refreshed as the code changes, giving AI agents a shared, up-to-date view they can reason from without scanning millions of lines of text on every task.

Hallucinations happen when AI agents infer how a system works from incomplete or fragmented context. Minimizing them requires grounding agents in resolved, authoritative knowledge about the codebase, such as real dependencies, service boundaries, and configuration. When agents reason from verified structure instead of guessing, hallucinations drop and outputs become more reliable.

Raw code is text without meaning attached. On its own, it doesn’t convey resolved types, symbol relationships, architectural boundaries, or runtime behavior. LLMs have to infer these relationships from snippets, which leads to partial understanding and errors. Providing structured, resolved context gives AI agents the meaning behind the code, not just the characters on the page.

Semantic code context represents what code means, not just what it says. It includes resolved symbols, types, dependencies, relationships between components, and architectural structure. This allows AI agents to reason about behavior, impact, and constraints instead of stitching together guesses from raw text.
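To make the difference concrete, here is a minimal sketch that uses Python's standard `ast` module as a toy stand-in. It is not how LSTs or Prethink work; the sample source and the helper names (`resolve_imports`, `resolved_calls`) are hypothetical. It only shows that a text search for `load` matches several unrelated things, while resolving names against imports tells you which symbol each call actually refers to.

```python
import ast

# Hypothetical sample source; it is parsed, never executed or imported.
SOURCE = '''
import json
from yaml import load

def load_config(path):
    return load(open(path))

def parse(text):
    return json.loads(text)
'''


def resolve_imports(tree):
    """Map each imported local name to the qualified symbol it refers to."""
    bindings = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                bindings[alias.asname or alias.name] = alias.name
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                bindings[alias.asname or alias.name] = f"{node.module}.{alias.name}"
    return bindings


def resolved_calls(source):
    """Return the qualified names of calls that resolve to an import."""
    tree = ast.parse(source)
    bindings = resolve_imports(tree)
    calls = []
    for node in ast.walk(tree):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        if isinstance(func, ast.Name) and func.id in bindings:
            calls.append(bindings[func.id])
        elif (
            isinstance(func, ast.Attribute)
            and isinstance(func.value, ast.Name)
            and func.value.id in bindings
        ):
            calls.append(f"{bindings[func.value.id]}.{func.attr}")
    return sorted(calls)


if __name__ == "__main__":
    # A raw text match cannot tell a definition from a call or an import.
    print("text matches for 'load':", SOURCE.count("load"))
    # Resolved context names the actual symbols being called.
    print("resolved calls:", resolved_calls(SOURCE))
```

This sketch deliberately ignores scoping, dotted imports, and types; a real semantic model resolves far more than import bindings.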

Sending large volumes of raw code to LLMs consumes tokens quickly and often has to be repeated across sessions and tasks. Agents spend tokens reconstructing context every time they work on a repository, which drives up inference costs. This cost grows rapidly in large, multi-repo systems.

Structured context lets agents start from a shared understanding of the codebase instead of rebuilding it on every interaction. When key relationships and structure are already resolved, agents need fewer tokens to build their understanding, which lowers overall LLM usage and cost.
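The arithmetic behind this claim can be sketched in a few lines. Every number below is an illustrative assumption, not a measurement of Prethink or of any model; the function name `total_tokens` is hypothetical. The point is only that the context cost is paid once per session, so shrinking it compounds across sessions.

```python
def total_tokens(context_tokens: int, task_tokens: int, sessions: int) -> int:
    """Tokens spent when every session pays for context plus the task itself."""
    return sessions * (context_tokens + task_tokens)


# Assumed, illustrative figures only.
SESSIONS = 20
TASK_TOKENS = 2_000            # tokens for the actual request and answer
RAW_CONTEXT_TOKENS = 50_000    # agent re-reads raw files each session
STRUCTURED_CONTEXT_TOKENS = 5_000  # agent reads a compact, resolved summary

raw_total = total_tokens(RAW_CONTEXT_TOKENS, TASK_TOKENS, SESSIONS)
structured_total = total_tokens(STRUCTURED_CONTEXT_TOKENS, TASK_TOKENS, SESSIONS)

if __name__ == "__main__":
    print("raw context total:       ", raw_total)
    print("structured context total:", structured_total)
    print("difference:              ", raw_total - structured_total)
```

With different assumptions the gap changes, but the shape of the calculation stays the same: per-session context size multiplied by the number of sessions.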

Service boundaries are rarely obvious from individual files. Agents need explicit knowledge of endpoints, integrations, and how components interact. When this information is surfaced as structured context, agents can reason about impact and interactions across services instead of inferring boundaries from scattered code references.

Architecture diagrams built for humans are often visual and descriptive, but not machine-readable. Machine-readable architecture uses structured representations of components and relationships so AI agents can reason about system structure programmatically. This allows agents to understand boundaries, dependencies, and system topology in a way that can be queried and validated.
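As a rough illustration of what "queried and validated" can mean, the sketch below models an architecture as plain nodes and relationships. The field names (`id`, `type`, `source`, `target`), the example services, and the helper functions are all hypothetical; this is not the CALM schema or Prethink's output format, just a minimal stand-in for a structured model.

```python
from collections import deque

# Hypothetical architecture model: declared components and directed
# "source depends on target" relationships.
ARCHITECTURE = {
    "nodes": [
        {"id": "web", "type": "service"},
        {"id": "orders", "type": "service"},
        {"id": "billing", "type": "service"},
        {"id": "orders-db", "type": "database"},
    ],
    "relationships": [
        {"source": "web", "target": "orders"},
        {"source": "orders", "target": "billing"},
        {"source": "orders", "target": "orders-db"},
    ],
}


def validate(model):
    """Return relationships that reference a node that was never declared."""
    known = {node["id"] for node in model["nodes"]}
    return [
        rel
        for rel in model["relationships"]
        if rel["source"] not in known or rel["target"] not in known
    ]


def impacted_by(model, changed):
    """Return every node that depends on `changed`, directly or transitively."""
    dependents = {}
    for rel in model["relationships"]:
        dependents.setdefault(rel["target"], []).append(rel["source"])

    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return sorted(seen)


if __name__ == "__main__":
    print("dangling relationships:", validate(ARCHITECTURE))
    print("impacted by orders-db change:", impacted_by(ARCHITECTURE, "orders-db"))
```

A diagram drawn for humans cannot answer "what is affected if `orders-db` changes?" without someone reading it; a structured model can answer it with a short traversal.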

AI recommendations are grounded when agents reason from authoritative, up-to-date knowledge about how the system actually works. This means using resolved context derived from the codebase itself rather than relying on ad hoc prompts or inferred relationships. Grounded context reduces guesswork and makes outputs more trustworthy.