AI is transforming how organizations modernize code, but success depends less on the technology itself than on strategic, organizational, and deeply human factors.

Consider this: in their research on AI-driven migrations, Google reported a 50% reduction in migration time, with 80% of code modifications fully AI-authored. Yet DX's data shows that some organizations adopting AI coding tools see twice as many customer-facing incidents. The difference isn't the AI itself; it's everything around it.

A recent panel discussion brought together engineering leaders Rui Abreu from Meta, Stoyan Nikolov from Google, Laura Tacho from DX, and Jonathan Schneider from Moderne to explore why these outcomes diverge so sharply as organizations apply AI to code maintenance at scale. Across the discussion, a consistent pattern emerged: AI delivers value when it is embedded in mature engineering systems, measured against business outcomes, and constrained by social and organizational realities—not when it is treated as a standalone productivity tool.

That pattern helps explain why AI-driven code maintenance works best in organizations that have built the systems to support it. At companies like Google and Meta, those systems include dedicated platform teams, deep internal research, rich semantic code representations, and governance practices refined over years. These companies didn’t simply adopt AI; they operationalized it. For most enterprises, those conditions don’t exist, and relying on generic AI tools can introduce fragile changes and higher risk. The challenge isn’t whether AI can modernize code at scale—it’s how to do so safely without hyperscaler-level resources.

Three key themes emerged from the panel: how organizations are de-risking AI for code maintenance, how they prioritize and measure the success of AI-driven work, and how teams are adapting as AI reshapes roles, ownership, and review practices.

Watch the full panel video here, and read on below to catch the highlights.

De-risking AI for code maintenance

When people talk about AI and software development, they often focus on generating new services, prototypes, or entire applications from scratch. But for many organizations, the bigger opportunity lies in safely changing what already exists. As Stoyan put it, “The biggest challenge for us is actually working on the already ginormous and continuously evolving code base.”

At this scale, code maintenance becomes a continuous operational function rather than a series of discrete projects. Volume, consistency, and safety matter as much as correctness, and approaches that rely on bespoke human effort quickly become untenable. This shift requires rethinking how organizations talk about the work itself. Companies like Meta and Google are moving away from calling it “technical debt,” a term that implies someone took a shortcut, and are instead framing it as maintenance: an expected cost of operating complex systems, not a failure that needs defending.

Across the panel, there was strong alignment that AI’s biggest risk isn’t hallucination or bad syntax. It’s unchecked change at scale, which makes de-risking strategies essential.

AI amplifies what's already there

Laura emphasized that AI success depends on the system it enters: “If you have bottlenecks in code review or hesitancy for PRs coming from outside, AI is only going to make it worse.” DX’s Q4 AI Impact Report, drawing on data from over 135,000 developers across 425 organizations, shows starkly different outcomes. Some teams cut customer-facing incidents in half, while others saw them double, depending on fundamentals such as testing culture, incremental verification, SRE maturity, and review practices.

Both Meta and Google have developed risk-scoring systems to manage this. Meta's "diff risk score" quantifies the risk of each change, enabling low-risk updates to flow automatically while routing higher-risk changes through deeper review. Google reports similar approaches. In both cases, developers remain active evaluators, not rubber stamps, reinforcing that AI must be governed carefully, not deployed indiscriminately.
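To make the routing idea concrete, here is a minimal Python sketch of threshold-based routing by risk score. It assumes each change already carries a score in [0, 1] from some upstream model; the `Change` type, the `route` function, the three review tiers, and both threshold values are hypothetical and do not describe Meta's diff risk score model or Google's internal tooling.

```python
from dataclasses import dataclass

# Hypothetical cutoffs; real systems would tune these against incident data.
AUTO_LAND_THRESHOLD = 0.2
DEEP_REVIEW_THRESHOLD = 0.6


@dataclass
class Change:
    change_id: str
    risk_score: float  # assumed to be in [0, 1], produced by an upstream model


def route(change: Change) -> str:
    """Map a change's risk score to a review path (hypothetical tiers)."""
    if not 0.0 <= change.risk_score <= 1.0:
        raise ValueError(f"risk_score out of range: {change.risk_score}")
    if change.risk_score < AUTO_LAND_THRESHOLD:
        return "auto-land"        # low risk: lands after automated checks pass
    if change.risk_score < DEEP_REVIEW_THRESHOLD:
        return "standard-review"  # a human reviewer still signs off
    return "deep-review"          # high risk: extra reviewers, slower rollout


for c in [Change("D1", 0.05), Change("D2", 0.4), Change("D3", 0.9)]:
    print(c.change_id, route(c))
```

The thresholds are where governance lives in a scheme like this: lowering `AUTO_LAND_THRESHOLD` sends more changes to people rather than letting them flow automatically.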

Measure outcomes, not activity

Another consistent message from the panel was a warning against optimizing for the wrong metrics. Across Google, Meta, DX, and Moderne, success wasn’t defined by how many pull requests AI produced or how much code it touched. Those are outputs. The teams seeing durable impact were measuring outcomes.

At Meta, migration is framed as an enabler for business agility and innovation. Large codebases degrade over time, slowing development, increasing defects, and raising costs. Making the connection between modernization effort and business impact is how teams earn leadership buy-in. In that context, measurement isn’t about counting changes; it’s about demonstrating that modernization is reducing drag and restoring momentum.

Jonathan shared an example that illustrates what happens when teams measure too early in the delivery process. A banking organization tracked pull request merge rates as their primary metric. With AI assistance, more PRs were being issued and merge rates increased, which suggested the initiative was working. But when the team looked further downstream at deployments, releases weren’t increasing at all. The work was getting accepted, but it wasn’t getting shipped. The bottleneck had simply moved elsewhere in the delivery pipeline.

The lesson was clear: the closer a metric is to production and business impact, the more meaningful it becomes. Tracking deployments, not just merged code, matters. Measuring completed work means understanding whether changes actually flowed through the system and delivered value, not just whether they passed review.
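A minimal Python sketch of the banking example's lesson: compute the stage-to-stage conversion rate across the delivery pipeline and flag the weakest transition. The stage names, the `stage_conversion` and `bottleneck` helpers, and the counts below are hypothetical illustrations, not figures from the bank Jonathan described.

```python
# Ordered delivery stages; the names are illustrative assumptions.
STAGES = ["opened", "merged", "deployed"]


def stage_conversion(counts: dict[str, int], stages: list[str]) -> dict[str, float]:
    """Return the fraction of items at each stage that reached the next stage."""
    rates = {}
    for upstream, downstream in zip(stages, stages[1:]):
        total = counts[upstream]
        rates[f"{upstream}->{downstream}"] = counts[downstream] / total if total else 0.0
    return rates


def bottleneck(counts: dict[str, int], stages: list[str]) -> str:
    """Return the stage transition with the lowest conversion rate."""
    rates = stage_conversion(counts, stages)
    return min(rates, key=rates.get)


# Hypothetical counts before and after AI assistance.
before = {"opened": 100, "merged": 60, "deployed": 50}
after = {"opened": 200, "merged": 150, "deployed": 50}

print(bottleneck(before, STAGES))  # opened->merged
print(bottleneck(after, STAGES))   # merged->deployed
```

In the hypothetical `after` case the merge rate rises from 60% to 75% while deployments stay flat, so the weakest transition moves downstream: a merge-rate dashboard would look healthy while nothing more reaches production.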

Beyond flow metrics, the panel emphasized measuring the kind of work AI enables. Stoyan described success as unblocking work that was previously too costly or slow to attempt. One meaningful signal, he said, is “allowing non-experts to do things that before needed a lot of experts,” which ultimately “reduces significantly the latency of these things happening in the company.” In that framing, AI delivers the most value when it removes structural friction and shortens timelines, not when it merely accelerates existing tasks.

DX’s research reinforces this outcome-oriented framing. As Laura noted, “Time to complete the project is the number one kind of executive-facing metric on AI modernization projects.” Leaders care less about how many changes were made and more about how quickly modernization objectives were achieved—and whether that speed translated into reduced opportunity cost and faster delivery of business value.

The panel also stressed the importance of portfolio-level measurement. AI-driven modernization isn’t a single migration; it’s a program that unfolds across an application estate. Measuring modernization completeness across that estate provides a clearer signal of progress than isolated project metrics, especially as priorities inevitably shift.
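One way to express estate-level completeness is sketched below in Python, assuming you can list which migrations each application has finished. The estate, application names, migration names, and the `completeness` and `overall_completeness` helpers are all hypothetical.

```python
def completeness(estate: dict[str, set[str]], migrations: list[str]) -> dict[str, float]:
    """Fraction of applications in the estate that have completed each migration."""
    n = len(estate)
    if n == 0:
        return {m: 0.0 for m in migrations}
    # sum() over booleans counts the applications where the migration is done.
    return {m: sum(m in done for done in estate.values()) / n for m in migrations}


def overall_completeness(estate: dict[str, set[str]], migrations: list[str]) -> float:
    """Fraction of all (application, migration) pairs that are done."""
    per_migration = completeness(estate, migrations)
    return sum(per_migration.values()) / len(migrations) if migrations else 0.0


# Hypothetical estate: application name -> migrations it has completed.
estate = {
    "billing": {"remove-mq", "java-17"},
    "ledger": {"remove-mq"},
    "portal": set(),
    "reports": {"remove-mq"},
}
migrations = ["remove-mq", "java-17"]
print(completeness(estate, migrations))          # {'remove-mq': 0.75, 'java-17': 0.25}
print(overall_completeness(estate, migrations))  # 0.5
```

A per-migration view like this stays meaningful when priorities shift mid-program, because it reports how far each change has spread across the estate rather than whether one project closed.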

Think horizontally for lasting impact

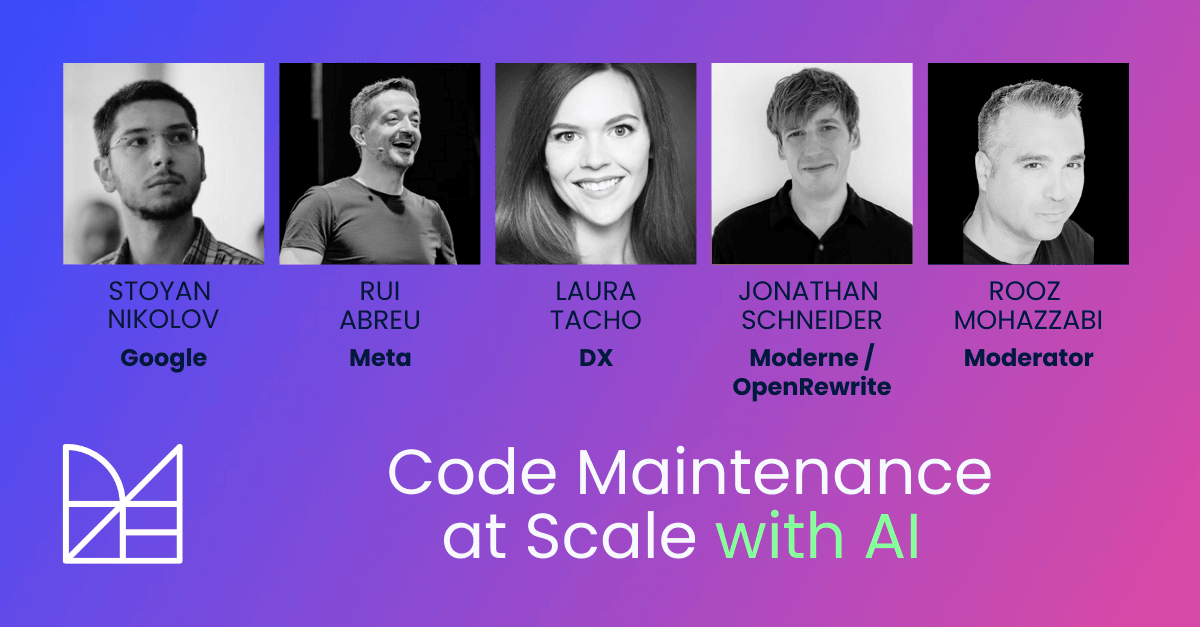

How teams prioritize the work is just as important as how they measure it. The panel described horizontal change not just as a migration technique but as a strategy for creating progress that survives shifting business goals. Jonathan shared an example that illustrates why this matters. A bank needed to remediate a vulnerability whose fix required Java 17, but it was still on Java 8, running roughly 3,000 applications on WebSphere, which itself maxed out at Java 8. Every one of those applications also depended on WebSphere MQ.

A traditional vertical approach would take applications through the entire stack one at a time. If business priorities shifted after 100 applications were completed, the organization would be left managing two platforms at once. By contrast, a horizontal approach eliminated WebSphere MQ across all 3,000 applications first. Even if priorities changed, the organization had still removed a major constraint from the system. When the upgrade inevitably became a priority again, there was one fewer dependency standing in the way.
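The difference between the two orderings can be sketched in a few lines of Python. This toy model assumes every step costs one unit of work per application, which is a simplification; the step names (including the assumed "move-off-websphere" step), the application names, and the equal-effort comparison are hypothetical.

```python
# Hypothetical migration steps, in the order they would be tackled.
STEPS = ["remove-websphere-mq", "move-off-websphere", "upgrade-java-17"]
APPS = [f"app-{i:04d}" for i in range(3000)]


def vertical_plan(apps: list[str], steps: list[str]) -> list[tuple[str, str]]:
    """Take each application through every step before starting the next one."""
    return [(app, step) for app in apps for step in steps]


def horizontal_plan(apps: list[str], steps: list[str]) -> list[tuple[str, str]]:
    """Apply one step across every application before moving to the next step."""
    return [(app, step) for step in steps for app in apps]


def eliminated(plan, apps, steps, units_done: int) -> list[str]:
    """Steps finished across *all* apps once the first units_done work items land."""
    done = set(plan[:units_done])
    return [s for s in steps if all((a, s) in done for a in apps)]


# The same effort (3,000 work items) spent two ways before priorities shift.
print(eliminated(vertical_plan(APPS, STEPS), APPS, STEPS, 3000))    # []
print(eliminated(horizontal_plan(APPS, STEPS), APPS, STEPS, 3000))  # ['remove-websphere-mq']
```

With equal effort, the vertical ordering finishes a third of the applications but removes no dependency from the estate as a whole, while the horizontal ordering removes one dependency everywhere.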

This way of thinking showed up repeatedly across the panel. Stoyan described the practical realities of large-scale change: transformations that touch thousands of files must be intentionally clustered and sequenced to be reviewable and to land. That execution constraint naturally favors cross-cutting, coordinated change over isolated, application-by-application efforts. From Meta’s perspective, Rui emphasized tying migration and refactoring decisions back to business outcomes, using modernization work to restore development velocity rather than treating it as a series of disconnected technical tasks.
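Stoyan's point about clustering and sequencing can be illustrated with a small Python sketch that groups a large change set by leading directory (used here as a stand-in for code ownership) and splits each group into review-sized batches. The ownership heuristic, the `depth` and `max_files` parameters, and the example paths are hypothetical; Google's actual clustering strategy is not described here.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def cluster_by_owner(paths: list[str], depth: int = 2) -> dict[str, list[str]]:
    """Group changed files by their leading directories, a stand-in for ownership."""
    groups: dict[str, list[str]] = defaultdict(list)
    for path in paths:
        # Files at the repository root have no parent parts, so give them a label.
        owner = "/".join(PurePosixPath(path).parent.parts[:depth]) or "<root>"
        groups[owner].append(path)
    return dict(groups)


def make_batches(paths: list[str], max_files: int = 50,
                 depth: int = 2) -> list[tuple[str, list[str]]]:
    """Split each cluster into reviewable batches, in a stable order by owner."""
    batches = []
    for owner, files in sorted(cluster_by_owner(paths, depth).items()):
        files = sorted(files)
        for start in range(0, len(files), max_files):
            batches.append((owner, files[start:start + max_files]))
    return batches


# Hypothetical change set touching 150 files in two areas of the codebase.
changed = ([f"payments/api/F{i}.java" for i in range(120)]
           + [f"search/index/G{i}.java" for i in range(30)])
for owner, batch in make_batches(changed):
    print(owner, len(batch))
# payments/api 50
# payments/api 50
# payments/api 20
# search/index 30
```

Alphabetical ordering is only one sequencing policy; a real system might order batches by dependency graph or by risk instead.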

One additional example underscored this point. Jonathan described a small insurance company that explicitly tagged every issue as either maintenance or a business feature. After the company adopted horizontal code transformation with Moderne, migration closures increased dramatically. But the real win was that business feature delivery jumped 30% that quarter. By reducing its ongoing maintenance burden, the team recovered engineering capacity that translated directly into shipped features. Those outcome-based metrics also informed prioritization, favoring work that removed broad constraints over narrowly scoped improvements.

The hardest part is organizational, not technical

Social and cultural factors often determine success more than technical capabilities because they shape whether teams trust automation in the first place. AI changes not just who does the work, but who owns the outcome. Teams frequently react differently to the same change depending on its source, and understanding these human dynamics is as critical as getting the technical implementation right.

Balance trust with engagement. Google has found that trust must be carefully calibrated. As Stoyan explained, developers need enough confidence in AI-generated changes to avoid frustration with low-quality output, but not so much trust that they disengage and rubber-stamp reviews simply because “a bot did it.” Successful teams balance explanation, gradual rollout, and layered validation so developers remain informed, accountable, and engaged.

Who triggers the change matters. Jonathan has observed something surprising while working with customers: PR merge rates are significantly higher when teams trigger changes themselves, even when the underlying automation is identical. As he explains, “A pull request coming from an external team is seen as unwelcome advice coming from an in-law; you’re just looking for a reason to reject it.” This insight shaped Moderne’s approach: teams retain agency by initiating changes when they’re ready.

Style preferences can block technically correct changes. Jonathan shared an example: "We had a team at one of our customers that entirely rejected a Java 17 upgrade on the basis of a pattern change they didn't like. They said, 'I just don't like this pattern and I don't want you to impose it upon me.'" Resistance melted only after someone explained that the change wasn't optional: the old constructor was gone. Having someone available to explain is crucial.

Ownership shifts from authorship to review. As AI takes on more modification work, reviewers become the accountable owners. Rui puts it plainly: “Going forward, the reviewer will be one of the key persons in software development, and not the author, because the author will be a machine.” This shift is already underway and requires new models of accountability that many organizations are still developing.

Taken together, these examples underscore that AI adoption is as much about redefining ownership as it is about improving efficiency. When reviewers become the accountable owners and teams retain agency over when and how changes are applied, AI shifts from an external force to an internal capability.

What successful organizations are doing differently

What separated successful adopters wasn’t experimentation, but execution: clear priorities, ownership, and a focus on outcomes over activity.

First, they get the fundamentals right. AI amplifies what's already there. Strong testing culture, effective code review processes, and solid deployment pipelines determine whether AI improves outcomes or makes problems worse.

Second, they account for social dynamics. Who triggers changes, how those changes are presented, and whether teams feel ownership affect adoption as much as technical quality. Organizations that succeed evolve their review practices, feedback loops, and governance models to match the new distribution of work and responsibility, rather than expecting tools alone to carry the change.

Finally, they measure business outcomes, not engineering activity. PR counts and code-generation volume can obscure real bottlenecks. They focus instead on business feature delivery, time to market, and recovered engineering capacity: the metrics that matter to the business.

Rui emphasized the strategic framing behind this approach: “We need to frame the migration as an enabler for business agility and innovation.” Meta’s research supports this view, showing how continual codebase improvement—from grassroots efforts to large-scale reengineering—helps sustain development velocity over time.

The technology exists, and the approaches have been proven at massive scale. What determines success is treating AI-driven code maintenance as a people-and-process challenge as much as a technical one.

Moderne’s perspective

The practices described in this panel are no longer theoretical. Google and Meta have demonstrated that AI-driven code maintenance can be safe, repeatable, and outcome-driven when it is built on deep code understanding, deterministic change, and disciplined review practices.

The gap for most enterprises isn’t ambition or intent. It’s that they don’t have internal research teams, custom LLMs, or years to build the infrastructure that makes these approaches work. That’s where Moderne focuses its effort. By grounding automation in Lossless Semantic Trees and deterministic transformation and review workflows, Moderne makes large-scale, horizontal code maintenance operational without requiring organizations to become AI research labs.

The result isn’t just faster upgrades. It’s a way to treat maintenance as a continuous capability rather than a recurring crisis, so modernization stops competing with innovation and starts enabling it.

Learn more about AI-driven modernization on your own terms with a Moderne demo.