AI coding assistants are now a familiar part of the developer workflow. Tools like Copilot, Claude Code, and Amazon Q can generate boilerplate code to expand upon, or even write whole applications with some guidance. Because they can plan and complete complex tasks, they help software engineers with work they would rather not do or might not otherwise be able to do.

But when it comes to navigating complex codebases, assistants still fall short.

According to the latest State of the Developer Ecosystem report from JetBrains, one of the top concerns developers have about AI is its “limited understanding of complex code.” METR’s July 2025 study of open-source development points the same way: AI-assisted developers took 19% longer on average to complete their tasks than those working without AI.

It’s not that the models aren’t powerful—it’s that they need a better understanding of your codebase:

- They can’t see how dependencies are used across services

- They don’t reason about team-specific conventions or policies

- They lack access to architectural context, type graphs, and broader structure

- They are not always capable of debugging without human intervention

Sure, you could try to stuff more information into the context window, but more isn’t better: performance tends to decline as input length grows. Think of it like research. If you need to answer a question, it’s easier to find the answer in a well-written article than buried in a 500-page book. For a model, the extra material doesn’t just slow things down; it can also cause confusion and reduce accuracy.

That’s the real challenge in day-to-day development: AI assistants don’t just need more data, they need the right data at the right size. That’s why teams are turning to tools like Moddy, or building custom agents that connect models to trusted, structured insights from their codebase.

Beyond the prompt: Why tool calling changes the game

One of the biggest barriers keeping AI assistants from truly understanding complex code is the context gap. Despite the vast knowledge baked into a large language model, an AI assistant is only as helpful as the real-time code data it can access and act on. Even the best models hit hard limits:

- Context windows overflow when working across services

- Token costs rise with each file or doc injected

- Accuracy drops as noise increases

These inefficiencies can compound across workflows and cause the kind of slowdowns that show up in real-world performance. As the METR study found, AI tends to struggle most in large, complex repositories. This is especially true where developers already have deep familiarity with the codebase and where critical context is spread across millions of lines of code and years of history. In other words, assistants perform worse where they can’t access or simply don’t use the tacit knowledge embedded in mature systems.

Early techniques like retrieval-augmented generation (RAG) tried to help by injecting relevant files into the prompt. But this often ends up like handing the model an entire book and asking it to find a sentence. The information is there, but the assistant doesn’t always know what to do with it or where to look.

Tool calling changes that. Instead of handing the model a whole book, you give it just the paragraph that matters, already scoped, structured, and relevant. That might be a filtered Jira ticket list, a CI policy check, a config validation, or any other targeted insight the model can act on with confidence.

Protocols like Anthropic’s open-source Model Context Protocol (MCP) were developed to better enable this pattern. MCP standardizes how AI assistants can ask for help: they interpret a developer’s intent, make an API call to a connected tool, and receive a deterministic, trusted result in return.
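
To make the request and response shape concrete, here is a minimal Python sketch of a single tool call. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool `name` and `arguments`. Everything else here is an assumption for illustration: the tool name `find_type_usages`, its argument, the canned index, and the in-process `handle_request` function that stands in for a real MCP server and transport. It is not Moddy's actual tool surface.

```python
import json

# Hypothetical data: a real server would query indexed code, not a dict.
_FAKE_INDEX = {
    "com.github.benmanes.caffeine.cache.Caffeine": [
        "orders-service/src/main/java/com/example/OrderCache.java",
        "billing-service/src/main/java/com/example/RateCache.java",
    ]
}


def find_type_usages(fully_qualified_type):
    """Hypothetical tool: return files that reference the given type."""
    return sorted(_FAKE_INDEX.get(fully_qualified_type, []))


TOOLS = {"find_type_usages": find_type_usages}


def build_tool_call(request_id, name, arguments):
    """Serialize a JSON-RPC 2.0 request that uses MCP's tools/call method."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def handle_request(raw):
    """Toy stand-in for a server: run the named tool and wrap its output.

    Error handling (unknown tools, bad arguments) is omitted for brevity.
    """
    request = json.loads(raw)
    params = request["params"]
    files = TOOLS[params["name"]](**params["arguments"])
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "content": [{"type": "text", "text": "\n".join(files)}],
            "isError": False,
        },
    })
```

The point of the shape is that the assistant sends a small, named request and gets back only the scoped result, here two file paths, instead of receiving every file that might be relevant.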

Moddy: Structured access to your codebase

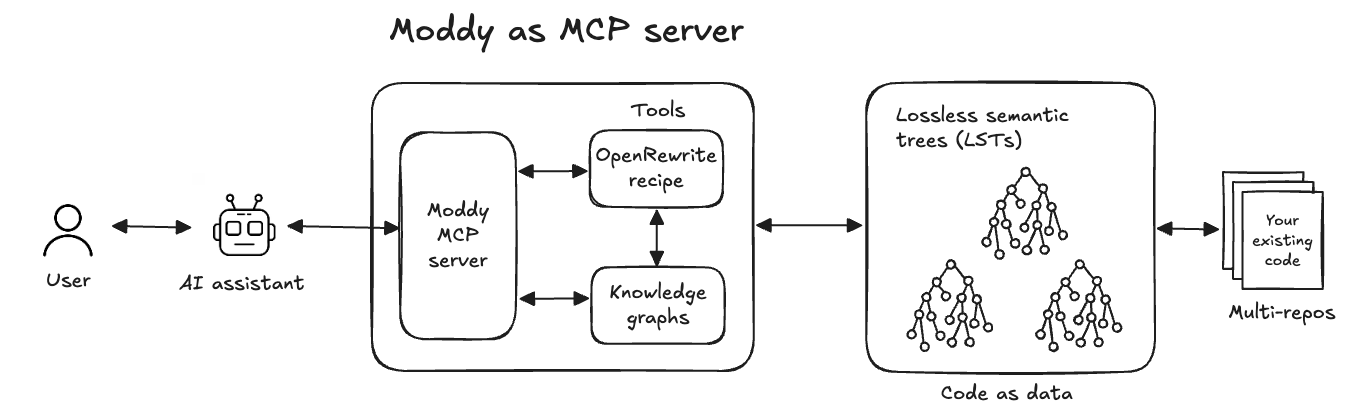

Moddy is Moderne’s AI agent purpose-built for large-scale codebases. Rather than acting on isolated files or one-off suggestions, Moddy leverages tool calling to scan for patterns, assess change impact, and run upgrades or remediations with deterministic precision across entire services and repositories.

It’s powered by the same capabilities behind the Moderne Platform and CLI:

- Lossless Semantic Trees (LSTs) that model code structure, types, and formatting

- OpenRewrite recipes acting as tools for data collection, migration, and policy enforcement

- Multi-repo orchestration that spans org structures, services, and teams

To bring this power closer to day-to-day development workflows, we created Moddy Desktop, a local version of the agent that runs directly on your machine. It still lets developers interact with their actual codebase using natural language prompts like “Where is this API used?” or “Upgrade all services to Spring Boot 3.5,” and receive structured answers and recommendations.

But you don’t have to use Moddy directly to benefit. Moddy Desktop includes a built-in MCP server, so AI assistants like Claude Code or Amazon Q can securely access the same context and tools through structured API calls.

Whether you prefer to chat directly with Moddy or integrate it into your assistant workflow, you get the same result: structured and accurate insight into your codebase, delivered by purpose-built tools that understand how enterprise code actually works.

Real-world workflows powered by Moddy

Architects, engineering leaders, platform teams, and developers can all use Moddy to bring structure and accuracy to AI-powered tasks.

Code search: Find and follow existing patterns

“Where are we already using Caffeine cache in our services?”

Moddy scans across your codebase to find structurally similar uses of a given API, pattern, or architectural construct, such as how and where the Caffeine cache library is used. Because the analysis runs on type-aware LSTs across repositories, it matches on fully qualified type information, so results are semantic matches rather than text hits.
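
The difference between a string search and a type-aware search can be shown with a toy example. This is only a sketch under loud assumptions: it treats hypothetical Java sources as plain text and approximates type resolution by reading `import` statements, whereas an LST carries compiler-resolved types. The file names and the `com.example.drinks.Caffeine` class are made up; `com.github.benmanes.caffeine.cache.Caffeine` is the Caffeine library's builder class.

```python
import re

CAFFEINE = "com.github.benmanes.caffeine.cache.Caffeine"

# Hypothetical Java sources keyed by file name.
SOURCES = {
    "OrderCache.java": """
        import com.github.benmanes.caffeine.cache.Caffeine;
        class OrderCache { Object c = Caffeine.newBuilder().build(); }
    """,
    "CoffeeMenu.java": """
        import com.example.drinks.Caffeine;
        class CoffeeMenu { Caffeine level = Caffeine.HIGH; }
    """,
    "Notes.java": """
        // TODO: evaluate Caffeine for caching
        class Notes {}
    """,
}

IMPORT_RE = re.compile(r"^\s*import\s+([\w.]+)\s*;", re.MULTILINE)


def text_matches(sources, word):
    """Naive search: any file that contains the word anywhere."""
    return sorted(name for name, src in sources.items() if word in src)


def type_matches(sources, fqn):
    """Approximate type-aware search: the simple name must be imported
    from the expected package and then used outside the import line."""
    simple = fqn.rsplit(".", 1)[-1]
    hits = []
    for name, src in sources.items():
        if fqn not in IMPORT_RE.findall(src):
            continue
        body = IMPORT_RE.sub("", src)
        if re.search(rf"\b{re.escape(simple)}\b", body):
            hits.append(name)
    return sorted(hits)
```

Here `text_matches(SOURCES, "Caffeine")` returns all three files, including a comment and an unrelated class with the same simple name, while `type_matches(SOURCES, CAFFEINE)` returns only `OrderCache.java`.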

This gives developers real-world examples that show not just whether the code is used but how it is implemented across services, helping them make informed, consistent decisions. AI assistants can also query these usages through Moddy MCP and generate new code that aligns with existing patterns.

Impact analysis: Understand change ripple effects

“If I change this field or method, what else do I need to update?”

Suppose you’re renaming a field from zipCode to postalCode in a Java class. While the change appears straightforward, that field may be referenced in API responses, validation rules, tests, or serialization annotations like @JsonProperty("zipCode").

Moddy analyzes your codebase to surface all relevant usages (direct and indirect) across repositories. This ensures that developers (or an AI assistant) can assess the full scope of impact before making the change and avoid breaking critical dependencies.
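
To see why a rename has more than one kind of reference, consider this small Python sketch. It is an illustration under stated assumptions, not how Moddy works: the snippets are hypothetical, and regular expressions stand in for the type-attributed analysis a real tool would use. It separates direct identifier uses from accessor calls and from string literals such as JSON property names, which a plain IDE rename may not touch.

```python
import re

# Hypothetical snippets standing in for files across repositories.
FILES = {
    "Address.java": 'class Address { @JsonProperty("zipCode") private String zipCode; }',
    "AddressValidator.java": "boolean ok(Address a) { return a.getZipCode() != null; }",
    "AddressTest.java": 'assertEquals("12345", json.get("zipCode").asText());',
    "Invoice.java": "class Invoice { private String currency; }",
}


def classify_references(files, field):
    """Group references to a field into direct identifiers, accessor calls,
    and string literals (for example JSON property names)."""
    accessor = field[0].upper() + field[1:]
    patterns = {
        # The field name as a quoted string, e.g. an annotation value.
        "string_literal": re.compile(rf'"{re.escape(field)}"'),
        # Conventional getter or setter calls such as getZipCode(...).
        "accessor": re.compile(rf"\b(?:get|set){re.escape(accessor)}\s*\("),
        # The bare identifier, excluding occurrences wrapped in quotes.
        "identifier": re.compile(rf'(?<!")\b{re.escape(field)}\b(?!")'),
    }
    report = {}
    for name, src in files.items():
        kinds = sorted(kind for kind, pat in patterns.items() if pat.search(src))
        if kinds:
            report[name] = kinds
    return report
```

Calling `classify_references(FILES, "zipCode")` flags three of the four files: the class itself (identifier plus string literal), the validator (accessor only), and the test (string literal only). The last two never mention the field by its bare name, which is the kind of indirect usage that is easy to miss.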

Security remediation: Detect and fix vulnerabilities at scale

“Are we still using Log4j anywhere?” or “Scan for known CVEs.”

When a new CVE hits, like Log4Shell, security teams need to find and fix it fast. But vulnerable libraries aren’t always declared directly, and usage can be hard to trace. Moddy analyzes type-attributed LSTs and dependency metadata to identify both direct and transitive references. Instead of just matching on method names like Logger.getLogger(), it determines the actual type behind the call (Log4j, java.util.logging, or something else) so it can pinpoint real vulnerabilities and filter out false positives.
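
The distinction between direct and transitive references comes down to a graph search over resolved dependencies. The sketch below assumes a hypothetical, hand-written dependency graph; the service names and `com.example:shared-http` are invented, and a real tool would read this information from build metadata rather than a dictionary.

```python
from collections import deque

# Hypothetical resolved dependency graph: artifact -> direct dependencies.
DEPENDENCIES = {
    "orders-service": ["com.example:shared-http", "org.slf4j:slf4j-api"],
    "billing-service": ["org.apache.logging.log4j:log4j-core"],
    "reports-service": ["org.slf4j:slf4j-api"],
    "com.example:shared-http": ["org.apache.logging.log4j:log4j-core"],
    "org.apache.logging.log4j:log4j-core": [],
    "org.slf4j:slf4j-api": [],
}


def find_dependency_path(graph, root, target):
    """Breadth-first search: return the shortest path from root to target,
    or None when the target is not reachable."""
    queue = deque([[root]])
    seen = {root}
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for dep in graph.get(path[-1], []):
            if dep not in seen:
                seen.add(dep)
                queue.append(path + [dep])
    return None


def classify_exposure(graph, services, target):
    """Label each service as a direct user, a transitive user, or unaffected."""
    result = {}
    for service in services:
        path = find_dependency_path(graph, service, target)
        if path is None:
            result[service] = "none"
        elif len(path) == 2:
            result[service] = "direct"
        else:
            result[service] = "transitive"
    return result
```

In this example `orders-service` never declares Log4j itself, yet the search reports it as transitively exposed through `com.example:shared-http`, and the returned path explains why.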

Once the vulnerable usages are identified, Moddy can also run upgrade recipes to refactor usage, bump versions, and produce PRs with tested, explainable changes. With Moddy MCP, assistants can automate this workflow: querying where a CVE exists, validating impact, and invoking the right fix.

Version upgrades: Migrate safely across the org

“I want to upgrade everything still using Java 8 to Java 17.”

Moddy can help organizations upgrade Java versions safely and systematically. It locates where older versions are still in use, assesses upgrade readiness, and flags breaking changes such as deprecated or removed methods and incompatible libraries.

Developers get a clear, structured view of what needs to change and why, so they can assess impact and choose where to start. Some teams go incrementally (like Java 8 to 11 first, then to 17) while others may opt to upgrade all at once. Either way, Moddy identifies and curates the right set of OpenRewrite recipes to guide the process and reduce risk.
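
The choice between incremental and all-at-once upgrades can be sketched as a small planning function. This is an illustration only: the repository inventory is hypothetical, the intermediate stops are just the versions named above, and the output is a list of version hops rather than any actual recipe selection.

```python
# Hypothetical inventory: repository -> Java version currently in use.
INVENTORY = {
    "orders-service": 8,
    "billing-service": 11,
    "reports-service": 17,
    "legacy-batch": 8,
}

# Intermediate stops taken from the versions mentioned in the text.
LTS_STEPS = [8, 11, 17]


def plan_upgrade(current, target, incremental=True):
    """Return the (from, to) hops needed to move from current to target."""
    if current >= target:
        return []
    if not incremental:
        return [(current, target)]
    # Stop at each known version strictly between current and target.
    stops = [v for v in LTS_STEPS if current < v < target] + [target]
    hops, at = [], current
    for stop in stops:
        hops.append((at, stop))
        at = stop
    return hops


def plan_all(inventory, target, incremental=True):
    """Plan only the repositories that are behind the target version."""
    return {
        repo: plan_upgrade(version, target, incremental)
        for repo, version in sorted(inventory.items())
        if version < target
    }
```

With the inventory above, `plan_all(INVENTORY, 17)` skips `reports-service`, gives `billing-service` a single hop from 11 to 17, and gives the two Java 8 repositories two hops each. Passing `incremental=False` collapses every plan to one hop.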

Assistants are only as smart as the data they can access

The best assistant in the world is only as helpful as the information behind it.

Whether you use Moddy, Claude Code, Amazon Q, or a custom agent, what matters is giving your assistant access to the right tools and data to bridge code and context. Moddy provides both the tooling and the context:

- LSTs for rich, compiler-accurate code structure

- Recipes for tested, repeatable changes

- Tool calling via UI or Moddy MCP for seamless orchestration

With tools providing scoped, pre-processed summaries instead of massive prompt stuffing, assistants exchange only what matters—lowering token usage and cost while improving precision. Fact-based, structured outputs mean less hallucination and more explainable results. Through the MCP framework, agents can collaborate cleanly across scanning, transforming, and validating tasks in a composable workflow that’s fully auditable and traceable.

OpenRewrite recipes are turning out to be ideal tools for this new generation of tool-capable LLMs: assistants can use them to reason about code and make changes safely. And behind the recipes, the LST provides the semantic context needed to make accurate decisions across files, repos, and teams.

Developers expect AI to accelerate them, not slow them down—and by plugging into Moddy’s tool layer, you can transform how development gets done.

Moddy Desktop is currently in exclusive beta. Contact us to learn more about what you can do with Moddy and Moderne.